by Mara Campbell

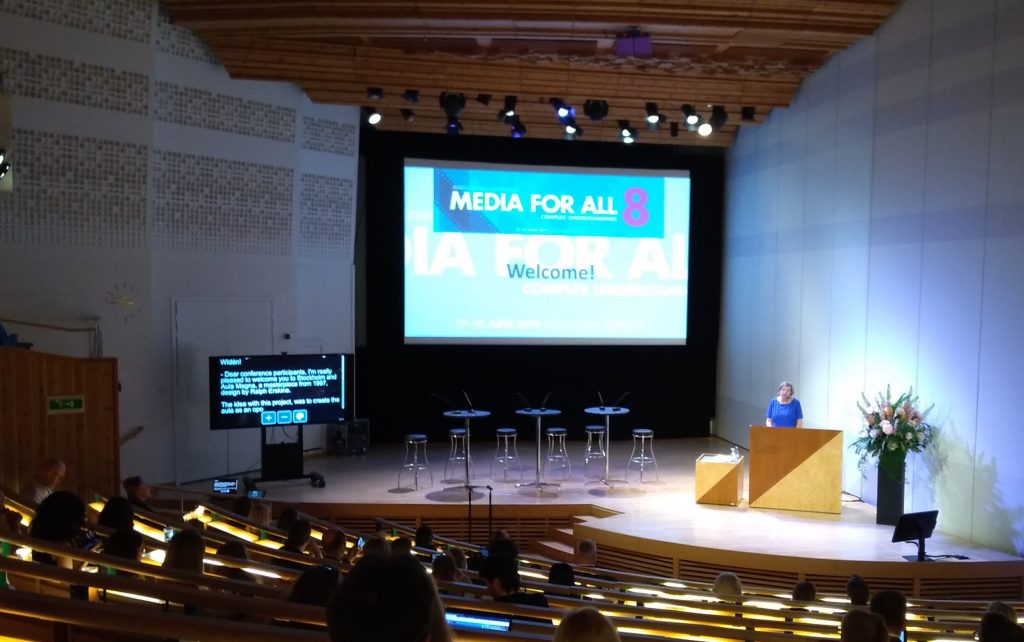

Last June, the Media for All 8 conference took place at Stockholm University. It was two days packed with 15-minute talks on many different aspects of audiovisual translation. One very interesting feature of the conference was the speech-to-text (STT) interpretation service provided. STT is essentially a live transcription of everything the speakers say. Deaf and hard-of-hearing members of the audience were able to follow the interpretation on a big screen in the Aula Magna, and on their own tablets, phones, or computers in the three smaller conference rooms.

Images 1 and 2: Big screen with STT interpretation on the left in the Aula Magna, the main venue of the conference.

Mohammed Shakrah, founder and CEO of Svensk Skrivtolkning, the Swedish company that provided the written interpretation, shed a lot of light on this discipline. The interpreters use a regular QWERTY keyboard for their work rather than a stenotype or Velotype keyboard. The reason is that learning to use one of these machines takes five years, while fast typists on regular keyboards are much easier to find and quicker to train. This, combined with the special abbreviation system the company devised, gives them an accuracy of about 99% in Swedish and nearly as high in English. Their abbreviation method, which helps a fast typist who normally types 160 words per minute reach between 250 and 300 words per minute, follows a consistent pattern. As a result, interpreters do not need to memorize every single abbreviation; they just have to be familiar with the system.

Preparation before an event or conference also plays a crucial part in the process. Every speaker at the conference was required to submit their presentation in advance. The PowerPoint file, together with the abstract and any other reference material submitted, was processed with software that analyzes word frequency and creates a list of the most frequently used terms and their corresponding abbreviations. This conference required the creation of 5,000 abbreviations in English, while the company's general Swedish abbreviation list contains about 45,000! This system keeps latency (the time between the moment the speaker says something and the moment the text appears on screen) to a minimum.
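The term-list step can be pictured with a minimal Python sketch. It only counts word frequencies in a speaker's submitted text and ranks the most frequent terms. The stop-word list, the function name `top_terms`, and the sample text are hypothetical, and the sketch does not reproduce Svensk Skrivtolkning's actual software or its abbreviation scheme, which the article does not describe in detail.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stop-word list; a real tool would use a much larger one.
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "is", "for", "on", "with", "that", "this"}

def top_terms(text: str, n: int = 10) -> list[tuple[str, int]]:
    """Return the n most frequent non-stop-words in text, most frequent first."""
    # Lowercase, then extract alphabetic tokens (allowing internal hyphens/apostrophes).
    tokens = re.findall(r"[a-z]+(?:[-'][a-z]+)*", text.lower())
    # Count only tokens that are not stop words.
    counts = Counter(t for t in tokens if t not in STOP_WORDS)
    return counts.most_common(n)

if __name__ == "__main__":
    # Hypothetical abstract standing in for a speaker's submitted material.
    abstract = (
        "Subtitling and dubbing are two modes of audiovisual translation. "
        "Subtitling is cheaper than dubbing, and subtitling preserves the original audio."
    )
    for term, count in top_terms(abstract, 2):
        print(term, count)
```

In this sketch, the interpreters (or a further hypothetical step) would then assign an abbreviation to each high-frequency term; ranking by frequency simply shows which terms are worth abbreviating first.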

The interpreters work in pairs. Like oral interpreters, they generally switch with their partner after about 10 minutes. They also support each other with a system of signals borrowed from Swedish Sign Language, using hand and finger gestures to indicate when a speaker is reading from the presentation and which part of the PowerPoint slide currently on screen is being read. It is better to tell users that the presenter is reading from the screen, because this gives them a chance to look at the presentation instead of staying fixed on the transcription. This way, they get the full experience of the lecture. Most deaf and hard-of-hearing people at conferences have not used the service before, so they don't know how to be a "proper user." If they focus on reading redundant information, they stop relying on their own hearing, get stuck on the screen, and miss visual cues from the room and the presenter. Hard-of-hearing audience members may also want to use the service to gauge how well they hear, by comparing what they hear with what is on the screen.

Attendees who want to follow the presentations through the STT interpretation can connect with their phones, tablets, or computers. This lets them remain inconspicuous in the crowd: they get the service without everyone noticing that they have special needs. The phone app has additional functions, such as links to Wikipedia when famous names are mentioned and metadata that expands on the text.

Image 3: A user following the STT interpretation on her tablet.

Image 4: On the left of the image, on top of the desk, notice a laptop screen that shows the STT interpretation.

Mr. Shakrah teaches courses on this discipline at the vocational school level. The university where students can earn a degree in Swedish Sign Language also offers courses in STT interpretation. The course used to be three years long because it required learning to use a Velotype keyboard, but students did not finish it with enough proficiency. End users considered the STT service poor, few STT interpreters were available, and salaries for this scarce work were quite low.

So Mr. Shakrah was asked to develop a curriculum that taught the method on a QWERTY keyboard and could be completed in one year. In the past four years, they have trained about fifty STT interpreters. They also redefined the recruiting criteria: instead of looking for people who were good at languages or good with people, they looked for fast typists proficient in both Swedish and English, ideally people inclined toward video gaming, which develops multitasking skills and finger speed. The certification criteria were also raised, and all of this led to a surge in qualified applicants. The availability of better interpreters revamped the profession and the service, and many more users started requesting it in teaching and business environments, because accuracy improved greatly and latency decreased dramatically.

Nowadays, these certified professionals can easily make a good living: those skilled enough to work at the university level earn excellent salaries, probably twice as much as average STT interpreters, who can still make a living working full-time in the industry.

Image 5: Mr. Shakrah and his fellow STT interpreter, Kevin, working on the written interpretation of one of the speakers.

Another application of this wonderful technology and knowledge is remote interpretation in various scenarios, whether business meetings, Skype calls, or even trips. The user connects the app with the interpreter, who listens in on the meeting, the call, or the tour guide from a remote location and types out everything being said. The deaf or hard-of-hearing person can type questions in a chat section of the app, which the interpreter then speaks aloud to the other party before typing up the response.

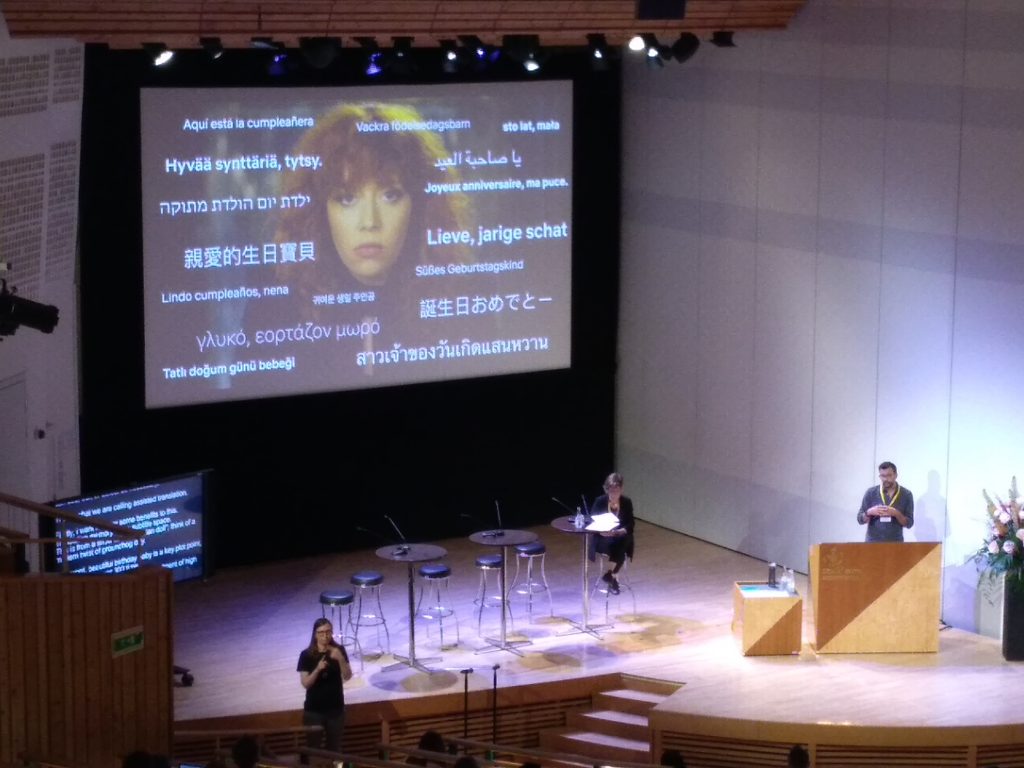

Image 6: The conference also provided Swedish Sign Language interpretation. Notice the SSL interpreter near the screen where the written interpretation is broadcast.

The AV world offers many interesting applications and areas in which we can apply, improve, and develop our talents and knowledge. Some of them, like speech-to-text interpretation, are of great help to people who would otherwise feel left out of certain environments. Being able to assist them is definitely an added bonus for our profession.

Check out these videos of live STT interpretation:

Live interpreters at work 1 (12 seconds)

Live interpreters at work 2 (42 seconds)

Live interpretation at the Aula Magna (29 seconds)

Live interpretation + Sign Language Interpreter (17 seconds)

We thank Gabriela Ortiz for contributing information that helped in the writing of this article.

Mara Campbell is an Argentine ATA-certified translator who has been subtitling, closed captioning, and translating subtitles and scripts for dubbing for the past 20 years. She has worked at several of the most important companies in Argentina and the USA. She is currently COO of True Subtitles, the company she founded in 2005. Her work has been seen on the screens of Netflix, Amazon, Hulu, HBO, BBC, and many more. She teaches courses, speaks at international conferences, and is a founding member of the AVD.

Published in Deep Focus, Issue 4, September, 2019