by Stavroula Sokoli

Most professional translators, including subtitlers, are always looking for new ways and tools to enhance their productivity. In my case, keeping up with the latest technological advances is also necessary for teaching and research. For these reasons, I decided to test AppTek’s machine translation (MT) engine, developed specifically for subtitling and recently deployed by PBT-EU in its media localization management platform, Next-TT. My goal was to see whether it could help produce high-quality subtitles for fiction and entertainment shows, i.e. correct, readable subtitles that convey the register and tone of the dialogue.

But before we get to that, a bit of relevant background: I am a translator with more than 20 years of experience in subtitling and technical translation, but I am not a professional post-editor, nor do I have any training in post-editing. However, I have used selected neural machine translation (NMT) engines to enhance my productivity when translating technical texts. As for MT in subtitling, it has been less than a year since some of my clients implemented it on their platforms. Netflix, as announced at the Media for All 8 conference in Stockholm in 2019, presents it to linguists as a tool called Assisted Translation.

Subtitlers working in Netflix’s online platform, the Originator, can either populate the whole subtitle file with MT suggestions and post-edit them, or work from scratch on an empty file and selectively use specific suggestions. For my language pair, the percentage of MT suggestions I could use without any editing has been relatively small: 5% to 10%, depending on the complexity of the dialogue. With such a low percentage, even reading the MT output seems counterproductive, let alone trying to post-edit it.

The news that AppTek’s MT engine, developed specifically for subtitling, had been deployed in Next-TT and could now be used in Subtitle Next sparked my curiosity. To test it, I used the film Christmas Wedding Planner, a typical rom-com in which dialogue is the main driver of the story and character development. I created the project in Next-TT using a high-quality English template together with the video file. This involved uploading the video and the subtitle file to the platform, clicking on “Translate” and choosing my language combination to get the machine-translated file.

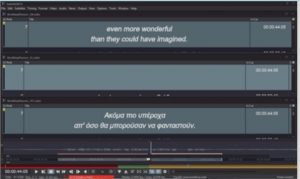

Subtitle Next does not yet offer a dedicated MT feature, which means that I could either post-edit the MT file directly or create a parallel view of three files: the English template, my translation and the MT file. I went for the second option, even though it made copying and pasting machine-translated segments/subtitles inconvenient, because I initially thought there wouldn’t be much copy-pasting.

Three-file view in Subtitle Next: original template, target file, MT file

As it turns out, I was wrong. The dialogue included several “straightforward” lines for which the MT engine produced excellent output, i.e. subtitles I could use unchanged, including line breaks and punctuation. Example 1 is one of those cases.

Example 1: Unchanged MT suggestion is a literal but successful rendering.

In total, I was able to use almost one third of the proposed MT subtitles (393 out of 1,225) without modifying them. Many of them were simple greetings, negations or affirmations, but there were also pleasant surprises, such as the successful omission shown in Example 2: the MT leaves out the discourse marker “Okay, see”, just as I would have.

Example 2: Unchanged MT suggestion: Successful omission

Example 3 shows a successful line break that follows grammatical rules while respecting the characters-per-line limit. Note that the line break is not taken from the English template, which consists of a single line.

Example 3: Unchanged MT suggestion: Successful line division
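The line break in Example 3 had to satisfy two constraints at once: grammar and a maximum number of characters per line. The length constraint alone can be sketched in Python as below. This is a minimal illustration, not how AppTek’s engine works: the function `split_two_lines`, the limit of 42 characters per line and the rule that a subtitle fitting on one line stays on one line are all my own assumptions (real limits depend on the client and language). The sketch picks the most balanced break that fits and knows nothing about grammar, which is precisely what made the MT output in Example 3 noteworthy.

```python
def split_two_lines(text: str, max_chars: int = 42) -> list[str]:
    """Split a subtitle into at most two lines of at most `max_chars` each.

    Breaks at the space closest to the middle, so the two lines are as
    balanced as possible. Length only: no grammatical rules are applied.
    `max_chars=42` is a hypothetical limit, not a client specification.
    """
    if len(text) <= max_chars:
        return [text]  # assumption: short subtitles stay on one line
    middle = len(text) / 2
    best = None
    for i, ch in enumerate(text):
        if ch != " ":
            continue
        # Line lengths if we break at this space (the space itself is dropped).
        first_len, second_len = i, len(text) - i - 1
        if first_len <= max_chars and second_len <= max_chars:
            # Keep the break closest to the middle of the text.
            if best is None or abs(i - middle) < abs(best - middle):
                best = i
    if best is None:
        raise ValueError("text does not fit in two lines")
    return [text[:best], text[best + 1:]]


print(split_two_lines("We could grab a bite before we meet the florist tomorrow"))
# ['We could grab a bite before', 'we meet the florist tomorrow']
```

A subtitler (or a well-trained engine) would additionally avoid breaks that separate, say, an article from its noun, even if that makes the lines less balanced.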

Apart from the expected literal but successful renderings, there were also effective MT suggestions for idiomatic expressions, where a literal translation would be incomprehensible. In Example 4, a literal Greek translation of “grab a bite” would make no sense, whereas the expression suggested by the MT system (literally “pinch something”) is one a native Greek speaker would use. “Starve” is also rendered correctly.

Example 4: Unchanged MT suggestion: Successful idiomatic renderings

In addition, there were 428 suggestions (a bit more than one third) that I could partly use, i.e. with changes involving only a few keystrokes, such as deleting text or punctuation, editing a single word, or adding a space. This includes cases where I would use only one of the two lines, as in Example 5. This is an interesting example, because the Greek MT text has been condensed in an acceptable but imperfect way.

The words “you think” and “of course” have been omitted, resulting in a subtitle within the characters-per-second limit; this is the kind of omission a subtitler would normally make to keep within reading-speed limits. A more effective solution, however, would be to condense the first line further and include “of course” in the second.

Example 5: Lightly post-edited MT suggestion
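Reading speed is usually measured in characters per second (CPS): the number of characters in a subtitle divided by the time it stays on screen. The Python sketch below assumes hypothetical limits of 42 characters per line and 17 CPS, and counts spaces and punctuation but not the line break; actual guidelines and counting rules differ between clients and languages. It illustrates why omissions like those in Example 5 matter: the same text can fail the limit at a short duration and pass at a longer one.

```python
def chars_per_second(lines: list[str], start: float, end: float) -> float:
    """Reading speed of a subtitle shown from `start` to `end` (in seconds).

    Assumption: spaces and punctuation count, the line break itself does not.
    """
    duration = end - start
    if duration <= 0:
        raise ValueError("end time must be after start time")
    return sum(len(line) for line in lines) / duration


def within_limits(
    lines: list[str],
    start: float,
    end: float,
    max_chars_per_line: int = 42,  # hypothetical limit
    max_cps: float = 17.0,  # hypothetical limit
) -> bool:
    """True if every line fits and the reading speed is acceptable."""
    fits = all(len(line) <= max_chars_per_line for line in lines)
    return fits and chars_per_second(lines, start, end) <= max_cps


subtitle = ["We could grab a bite before", "we meet the florist tomorrow"]
print(chars_per_second(subtitle, 0.0, 3.0))  # 55 characters in 3 s, about 18.33
print(within_limits(subtitle, 0.0, 3.0))  # False: too fast to read
print(within_limits(subtitle, 0.0, 4.0))  # True: 13.75 CPS
```

When the timing cannot change, the only way to pass such a check is to shorten the text, which is exactly what the MT engine did, if imperfectly, in Example 5.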

A total of 404 subtitles were unusable (there was an abundance of examples worthy of becoming internet memes), either because no part of the text could be used or because editing them would take longer than typing the translation from scratch. The number of changes, or keystrokes, beyond which an MT suggestion becomes useless is hard to establish, and deciding whether to reuse part of a text can be time-consuming in itself. The threshold is also quite personal, as it depends on specific skills such as fast typing or touch-typing: a subtitler who types very fast may find it easier to write everything from scratch than to click on several different words to make even slight edits.

In any case, the unusable MT suggestions included the types of errors I had already encountered in my previous experience with MT: literal translations ranging from non-fluent to nonsensical, gender errors affecting several words (Greek is a highly inflected language), and formality errors, since Greek, like Spanish or German, has two levels of formality in address, whereas English has only one.

Consistency, often heralded as an advantage of MT, was absent from the MT file. One of the most striking examples was the word “scone”, which was rendered in three different ways: a) with a Greek word that sounds like “scone” but means something entirely different (σκονάκι /skonaki/, a word whose meanings range from “small dose of drug” to “cheat sheet”); b) as another type of food that was inappropriate for the context (λουκάνικο, meaning “sausage”); c) left untranslated. Paradoxically, this inconsistency could also be considered an asset, as different translations may sometimes spark the subtitler’s imagination and trigger creative thinking.
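The three categories reported in this test add up to the whole file. A quick check in Python, using only the counts given above (the category labels and the helper `category_shares` are my own shorthand), confirms the total and shows that each category accounts for roughly a third of the subtitles.

```python
def category_shares(counts: dict[str, int]) -> dict[str, float]:
    """Return each category's share of the total, as a percentage."""
    total = sum(counts.values())
    return {label: 100 * n / total for label, n in counts.items()}


counts = {
    "used unchanged": 393,
    "used with light edits": 428,
    "unusable": 404,
}
print(sum(counts.values()))  # 1225, the number of subtitles in the file
for label, share in category_shares(counts).items():
    print(f"{label}: {share:.1f}%")
# used unchanged: 32.1%
# used with light edits: 34.9%
# unusable: 33.0%
```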

This piece is not about what MT is, how engines are trained, or other aspects of how its use affects professional subtitling, such as rates and the possible long-term effects of the increased use of MT in subtitling. Rather, I have tested a specific MT engine as a tool to find out whether it could be useful. Given the high number of ready-to-use subtitles, the conclusion is that yes, AppTek’s engine can accelerate the subtitling process and increase productivity in certain cases, depending on the type of dialogue, while maintaining high quality. Of course, the engine alone is not enough: it also needs to be properly integrated into the subtitling tool, for example so that an MT suggestion can be inserted at the touch of a button. Copying and pasting suggestions, or having to post-edit each one, slows down the process. As with any tool, improper use may lead to a decrease in quality. Improper use includes keeping unedited, non-fluent solutions that fail to convey the register and tone of the characters, also known as the “good enough” approach. Prior experience in subtitling is needed to make the most of the advantages MT offers while avoiding its pitfalls.

Stavroula Sokoli, PhD, is a researcher in Audiovisual Translation with more than 25 publications on the subject. She has coordinated several international and nationally funded research projects, managing international teams of software engineers, designers, academics and educational experts. She has extensive experience working in higher education institutions in Greece and Spain, in both audiovisual localization and language education. As a subtitling practitioner, she has translated numerous films, series and documentaries since 2001. More at: https://www.linkedin.com/in/sokoli/